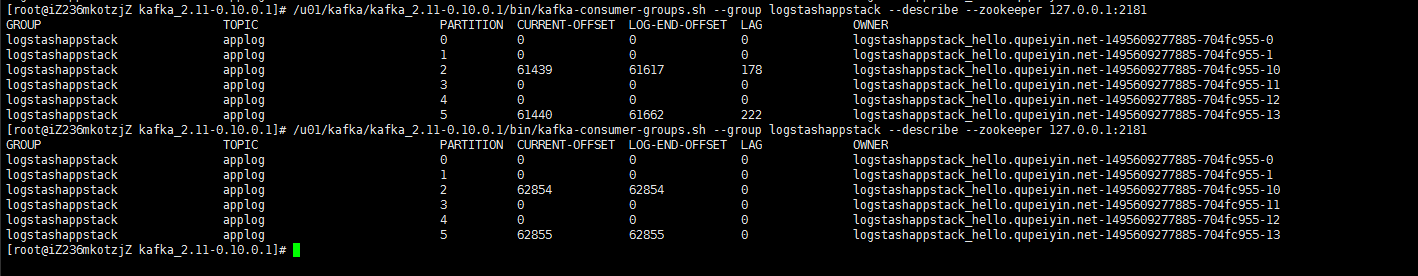

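I created a topic in Kafka with the default of 6 partitions and 1 replica, spread across three nodes. But when I write data to it, only two of the partitions actually store any data. No matter what I adjust, nothing changes, and I'm really puzzled.

Here are some of the INFO messages it logged. I'm not sure whether they are related to this problem.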

[2017-05-24 14:57:53,462] INFO Partition [applog,5] on broker 1: No checkpointed highwatermark is found for partition [applog,5] (kafka.cluster.Partition)

[2017-05-24 14:57:53,484] INFO Completed load of log applog-2 with log end offset 0 (kafka.log.Log)

[2017-05-24 14:57:53,485] INFO Created log for partition [applog,2] in /usr/local/kafka/kafka_2.11-0.10.0.1/data with properties {compression.type -> producer, message.format.version -> 0.10.0-IV1, file.delete.delay.ms -> 60000, max.message.bytes -> 1000012, message.timestamp.type -> CreateTime, min.insync.replicas -> 1, segment.jitter.ms -> 0, preallocate -> false, min.cleanable.dirty.ratio -> 0.5, index.interval.bytes -> 4096, unclean.leader.election.enable -> true, retention.bytes -> -1, delete.retention.ms -> 86400000, cleanup.policy -> delete, flush.ms -> 9223372036854775807, segment.ms -> 604800000, segment.bytes -> 1073741824, retention.ms -> 216000000, message.timestamp.difference.max.ms -> 9223372036854775807, segment.index.bytes -> 10485760, flush.messages -> 9223372036854775807}. (kafka.log.LogManager)

[2017-05-24 14:57:53,492] INFO Partition [applog,2] on broker 1: No checkpointed highwatermark is found for partition [applog,2] (kafka.cluster.Partition)

[2017-05-24 15:04:52,832] INFO [Group Metadata Manager on Broker 1]: Removed 0 expired offsets in 0 milliseconds. (kafka.coordinator.GroupMetadataManager)

[2017-05-24 15:14:52,831] INFO [Group Metadata Manager on Broker 1]: Removed 0 expired offsets in 0 milliseconds. (kafka.coordinator.GroupMetadataManager)

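This assumes the topic's partitions were not added later. If they were added later, this is normal.

1. Check the cluster state and confirm that the leader of each affected partition is healthy. Then look at the Kafka server logs on the corresponding nodes for errors.

2. If that doesn't help, try restarting the affected Kafka nodes.

3. If it still doesn't work, run a partition reassignment to force a rebalance. https://www.orchome.com/565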
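Step 1 can be partly scripted. The sketch below assumes you feed it the text printed by `kafka-topics.sh --describe --topic applog` (with `--zookeeper` on older clusters, `--bootstrap-server` on newer ones) and that each per-partition line has the usual `Topic: … Partition: … Leader: … Replicas: … Isr: …` layout; that layout differs slightly between Kafka versions, so the regex is an assumption. `find_problem_partitions` is a hypothetical helper, not part of Kafka. It flags partitions with no leader and partitions whose in-sync replica list (ISR) is shorter than the replica list, i.e. under-replicated partitions.

```python
import re
from typing import Dict, List

# Assumed layout of one per-partition line from `kafka-topics.sh --describe`, e.g.
#   Topic: applog  Partition: 0  Leader: 1  Replicas: 1  Isr: 1
# Older versions print -1 for a missing leader, newer ones print "none".
PARTITION_LINE = re.compile(
    r"Topic:\s*(?P<topic>\S+)\s+Partition:\s*(?P<partition>\d+)\s+"
    r"Leader:\s*(?P<leader>-?\d+|none)\s+"
    r"Replicas:\s*(?P<replicas>[\d,]+)\s+Isr:\s*(?P<isr>[\d,]*)"
)


def find_problem_partitions(describe_output: str) -> Dict[str, List[str]]:
    """Hypothetical helper: flag leaderless and under-replicated partitions."""
    problems: Dict[str, List[str]] = {"no_leader": [], "under_replicated": []}
    for line in describe_output.splitlines():
        m = PARTITION_LINE.search(line)
        if m is None:
            continue  # topic summary line or anything we don't recognise
        name = f"{m['topic']}-{m['partition']}"
        if m["leader"] in ("-1", "none"):
            problems["no_leader"].append(name)
        replicas = m["replicas"].split(",")
        isr = [b for b in m["isr"].split(",") if b]
        # Under-replicated: fewer in-sync replicas than assigned replicas.
        if len(isr) < len(replicas):
            problems["under_replicated"].append(name)
    return problems


if __name__ == "__main__":
    sample = (
        "Topic:applog\tPartitionCount:3\tReplicationFactor:3\tConfigs:\n"
        "\tTopic: applog\tPartition: 0\tLeader: 1\tReplicas: 1,2,3\tIsr: 1,2,3\n"
        "\tTopic: applog\tPartition: 1\tLeader: 2\tReplicas: 2,3,1\tIsr: 2,1\n"
        "\tTopic: applog\tPartition: 2\tLeader: -1\tReplicas: 3\tIsr:\n"
    )
    print(find_problem_partitions(sample))
    # {'no_leader': ['applog-2'], 'under_replicated': ['applog-1', 'applog-2']}
```

A partition listed under `no_leader` cannot accept writes at all, which is the first thing to rule out when data only lands on some partitions.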

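"This assumes the topic's partitions were not added later. If they were added later, this is normal."

If partitions were added later and the data ends up unevenly spread across them, how should that be handled? I've just run into this problem.

If partitions are added later, producers will also send to the new partitions, so that's not a problem.

It's really just uneven storage; nothing to worry about.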
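To illustrate why the storage looks uneven after an expansion, here is a minimal simulation, assuming messages without keys are spread perfectly evenly (an idealized round-robin) over whatever partitions exist when they are written. Real producers only approximate this, and `round_robin_counts` is a hypothetical name.

```python
from collections import Counter
from typing import Iterable, Tuple


def round_robin_counts(batches: Iterable[Tuple[int, int]]) -> Counter:
    """Hypothetical simulation: messages per partition when each batch is spread
    evenly (idealized round-robin) over the partitions existing at write time.

    Each batch is (num_partitions, num_messages).
    """
    counts: Counter = Counter()
    for num_partitions, num_messages in batches:
        for i in range(num_messages):
            counts[i % num_partitions] += 1
    return counts


if __name__ == "__main__":
    # 600 messages while the topic has 6 partitions, then 800 more after expanding to 8.
    counts = round_robin_counts([(6, 600), (8, 800)])
    print([counts[p] for p in range(8)])
    # [200, 200, 200, 200, 200, 200, 100, 100]
```

After the expansion every partition receives the same share of new writes; partitions 0 to 5 only look heavier because they still hold data written before the new partitions existed. With `cleanup.policy=delete`, those older segments are eventually removed by retention, so this kind of imbalance tends to fade over time.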

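Hi, I have a question. My environment is a Kafka cluster with 3 ZooKeeper nodes and 3 brokers. When I shut down one node, Kafka's under-replicated partitions jump to 100%. I have also increased the topic from a single replica to 3 replicas, but a manual leader election still fails, even though the log says this broker is already the leader: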

[2020-08-08 19:06:21,462] INFO [Broker id=0] Skipped the become-leader state change after marking its partition as leader with correlation id 13 from controller 2 epoch 22 for partition __consumer_offsets-34 (last update controller epoch 22) since it is already the leader for the partition. (state.change.logger)

[2020-08-08 19:06:21,462] INFO [Broker id=0] Skipped the become-leader state change after marking its partition as leader with correlation id 13 from controller 2 epoch 22 for partition __consumer_offsets-15 (last update controller epoch 22) since it is already the leader for the partition. (state.change.logger)

[2020-08-08 19:06:21,463] INFO [Broker id=0] Skipped the become-leader state change after marking its partition as leader with correlation id 13 from controller 2 epoch 22 for partition __consumer_offsets-12 (last update controller epoch 22) since it is already the leader for the partition. (state.change.logger)

[2020-08-08 19:06:21,464] INFO [Broker id=0] Skipped the become-leader state change after marking its partition as leader with correlation id 13 from controller 2 epoch 22 for partition __consumer_offsets-9 (last update controller epoch 22) since it is already the leader for the partition. (state.change.logger)

[2020-08-08 19:06:21,464] INFO [Broker id=0] Skipped the become-leader state change after marking its partition as leader with correlation id 13 from controller 2 epoch 22 for partition __consumer_offsets-28 (last update controller epoch 22) since it is already the leader for the partition. (state.change.logger)

[2020-08-08 19:06:21,465] INFO [Broker id=0] Skipped the become-leader state change after marking its partition as leader with correlation id 13 from controller 2 epoch 22 for partition fluentbit.kube-0 (last update controller epoch 22) since it is already the leader for the partition. (state.change.logger)

[2020-08-08 19:06:21,466] INFO [Broker id=0] Skipped the become-leader state change after marking its partition as leader with correlation id 13 from controller 2 epoch 22 for partition __consumer_offsets-6 (last update controller epoch 22) since it is already the leader for the partition. (state.change.logger)

[2020-08-08 19:06:21,466] INFO [Broker id=0] Skipped the become-leader state change after marking its partition as leader with correlation id 13 from controller 2 epoch 22 for partition __consumer_offsets-16 (last update controller epoch 22) since it is already the leader for the partition. (state.change.logger)

[2020-08-08 19:06:21,467] INFO [Broker id=0] Skipped the become-leader state change after marking its partition as leader with correlation id 13 from controller 2 epoch 22 for partition __consumer_offsets-22 (last update controller epoch 22) since it is already the leader for the partition. (state.change.logger)

[2020-08-08 19:06:21,468] INFO [Broker id=0] Skipped the become-leader state change after marking its partition as leader with correlation id 13 from controller 2 epoch 22 for partition __consumer_offsets-3 (last update controller epoch 22) since it is already the leader for the partition. (state.change.logger)

The partition is chosen by key.hashCode() % numberOfPartitions. Are your message keys mostly fixed? The message key determines which partition a message is sent to.
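A minimal Python sketch of the `key.hashCode() % numberOfPartitions` rule from the reply, assuming string keys and Java's `String.hashCode()` (reimplemented below, exact only for characters in the Basic Multilingual Plane). `java_string_hash`, `partition_for` and `partitions_used` are hypothetical names, and masking the sign bit is just one way to keep the index non-negative. Note that the Java producer's `DefaultPartitioner` actually hashes the serialized key bytes with murmur2 rather than calling `hashCode()`, so real partition numbers will differ; the conclusion is the same either way: a keyed message's partition depends only on its key, so a handful of fixed keys can only ever fill a handful of partitions.

```python
from typing import Iterable, Set


def java_string_hash(s: str) -> int:
    """Java's String.hashCode() as a signed 32-bit int (exact for BMP characters only)."""
    h = 0
    for ch in s:
        h = (31 * h + ord(ch)) & 0xFFFFFFFF
    return h - (1 << 32) if h >= (1 << 31) else h


def partition_for(key: str, num_partitions: int) -> int:
    """Hypothetical partitioner: hashCode % partitions, with the sign bit masked
    off so negative hash codes still give a valid partition index."""
    return (java_string_hash(key) & 0x7FFFFFFF) % num_partitions


def partitions_used(keys: Iterable[str], num_partitions: int) -> Set[int]:
    """The set of partitions a stream of keyed messages would land on."""
    return {partition_for(k, num_partitions) for k in keys}


if __name__ == "__main__":
    # Only two distinct keys, however many messages: at most two partitions get data.
    fixed_keys = ["host-a", "host-b"] * 10_000
    print(len(partitions_used(fixed_keys, 6)))
    # Many distinct keys spread across all six partitions.
    varied_keys = [f"user-{i}" for i in range(1000)]
    print(len(partitions_used(varied_keys, 6)))
```

If the keys carry no ordering requirement, sending messages without a key (or with a higher-cardinality key) lets the producer spread them across all partitions.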