server.properties configuration:

```properties
# Licensed to the Apache Software Foundation (ASF) under one or more
# contributor license agreements. See the NOTICE file distributed with
# this work for additional information regarding copyright ownership.
# The ASF licenses this file to You under the Apache License, Version 2.0
# (the "License"); you may not use this file except in compliance with
# the License. You may obtain a copy of the License at
#
# http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.

# see kafka.server.KafkaConfig for additional details and defaults

############################# Server Basics #############################

# The id of the broker. This must be set to a unique integer for each broker.
broker.id=0

############################# Socket Server Settings #############################

# The address the socket server listens on. It will get the value returned from
# java.net.InetAddress.getCanonicalHostName() if not configured.
# FORMAT:
# listeners = listener_name://host_name:port
# EXAMPLE:
# listeners = PLAINTEXT://your.host.name:9092
listeners=PLAINTEXT://<redacted>:19092

# Hostname and port the broker will advertise to producers and consumers. If not set,
# it uses the value for "listeners" if configured. Otherwise, it will use the value
# returned from java.net.InetAddress.getCanonicalHostName().

# Maps listener names to security protocols, the default is for them to be the same. See the config documentation for more details
#listener.security.protocol.map=PLAINTEXT:PLAINTEXT,SSL:SSL,SASL_PLAINTEXT:SASL_PLAINTEXT,SASL_SSL:SASL_SSL

# The number of threads that the server uses for receiving requests from the network and sending responses to the network
num.network.threads=3

# The number of threads that the server uses for processing requests, which may include disk I/O
num.io.threads=8

# The send buffer (SO_SNDBUF) used by the socket server
socket.send.buffer.bytes=102400

# The receive buffer (SO_RCVBUF) used by the socket server
socket.receive.buffer.bytes=102400

# The maximum size of a request that the socket server will accept (protection against OOM)
socket.request.max.bytes=104857600

############################# Log Basics #############################

# A comma separated list of directories under which to store log files
log.dirs=/home/gateway/environment/kafka_2.12-2.8.0/data

# The default number of log partitions per topic. More partitions allow greater
# parallelism for consumption, but this will also result in more files across
# the brokers.
num.partitions=1

# The number of threads per data directory to be used for log recovery at startup and flushing at shutdown.
# This value is recommended to be increased for installations with data dirs located in RAID array.
num.recovery.threads.per.data.dir=1

############################# Internal Topic Settings #############################

# The replication factor for the group metadata internal topics "__consumer_offsets" and "__transaction_state"
# For anything other than development testing, a value greater than 1 is recommended to ensure availability such as 3.
offsets.topic.replication.factor=1
transaction.state.log.replication.factor=1
transaction.state.log.min.isr=1

############################# Log Flush Policy #############################

# Messages are immediately written to the filesystem but by default we only fsync() to sync
# the OS cache lazily. The following configurations control the flush of data to disk.
# There are a few important trade-offs here:
# 1. Durability: Unflushed data may be lost if you are not using replication.
# 2. Latency: Very large flush intervals may lead to latency spikes when the flush does occur as there will be a lot of data to flush.
# 3. Throughput: The flush is generally the most expensive operation, and a small flush interval may lead to excessive seeks.
# The settings below allow one to configure the flush policy to flush data after a period of time or
# every N messages (or both). This can be done globally and overridden on a per-topic basis.

# The number of messages to accept before forcing a flush of data to disk
#log.flush.interval.messages=10000

# The maximum amount of time a message can sit in a log before we force a flush
#log.flush.interval.ms=1000

############################# Log Retention Policy #############################

# The following configurations control the disposal of log segments. The policy can
# be set to delete segments after a period of time, or after a given size has accumulated.
# A segment will be deleted whenever *either* of these criteria are met. Deletion always happens
# from the end of the log.

# The minimum age of a log file to be eligible for deletion due to age
# Currently set to keep 72 hours, but last year's data is still there and has not been deleted; no idea why this isn't taking effect
log.retention.hours=72

# A size-based retention policy for logs. Segments are pruned from the log unless the remaining
# segments drop below log.retention.bytes. Functions independently of log.retention.hours.
#log.retention.bytes=1073741824

# The maximum size of a log segment file. When this size is reached a new log segment will be created.
log.segment.bytes=1073741824

# The interval at which log segments are checked to see if they can be deleted according
# to the retention policies
log.retention.check.interval.ms=60000

############################# Zookeeper #############################

# Zookeeper connection string (see zookeeper docs for details).
# This is a comma separated host:port pairs, each corresponding to a zk
# server. e.g. "127.0.0.1:3000,127.0.0.1:3001,127.0.0.1:3002".
# You can also append an optional chroot string to the urls to specify the
# root directory for all kafka znodes.
zookeeper.connect=localhost:2181

# Timeout in ms for connecting to zookeeper
zookeeper.connection.timeout.ms=18000

############################# Group Coordinator Settings #############################

# The following configuration specifies the time, in milliseconds, that the GroupCoordinator will delay the initial consumer rebalance.
# The rebalance will be further delayed by the value of group.initial.rebalance.delay.ms as new members join the group, up to a maximum of max.poll.interval.ms.
# The default value for this is 3 seconds.
# We override this to 0 here as it makes for a better out-of-the-box experience for development and testing.
# However, in production environments the default value of 3 seconds is more suitable as this will help to avoid unnecessary, and potentially expensive, rebalances during application startup.
group.initial.rebalance.delay.ms=0
```
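For reference, here is how the retention settings in this file resolve. Kafka documents that `log.retention.ms` takes precedence over `log.retention.minutes`, which takes precedence over `log.retention.hours`, so `log.retention.hours=72` comes to 259,200,000 ms. The following is a minimal Python sketch of that resolution. It assumes a simplified `key=value` parser rather than the full .properties syntax.

```python
# Sketch: resolve the broker-wide default retention from server.properties text.
# Precedence: log.retention.ms > log.retention.minutes > log.retention.hours.


def parse_properties(text: str) -> dict[str, str]:
    """Minimal parser: key=value lines only; '#' comments and blank lines skipped."""
    props = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        key, _, value = line.partition("=")
        props[key.strip()] = value.strip()
    return props


def broker_retention_ms(props: dict[str, str]) -> int:
    """Effective retention in ms, taken from the highest-precedence key present."""
    if "log.retention.ms" in props:
        return int(props["log.retention.ms"])
    if "log.retention.minutes" in props:
        return int(props["log.retention.minutes"]) * 60_000
    # Kafka's default when none of the keys is set is 168 hours (7 days).
    return int(props.get("log.retention.hours", "168")) * 3_600_000


config = """
log.retention.hours=72
log.segment.bytes=1073741824
log.retention.check.interval.ms=60000
"""
props = parse_properties(config)
print(broker_retention_ms(props))  # 259200000 (72 h)
```

With `log.retention.check.interval.ms=60000`, the broker should re-evaluate this limit every minute. This means a 72-hour setting would normally show up on disk within a few minutes of a segment crossing the limit.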

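At first I had log compaction (compact) configured, and later I switched to deletion (delete), but neither seems to have taken effect. I restart the service every time I change the settings, yet two days later the disk usage is still just as high.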

I picked one topic's data folder at random:

- Log cleaner output:

```text
[2023-03-03 22:57:59,408] INFO Cleaner 0: Beginning cleaning of log __consumer_offsets-7. (kafka.log.LogCleaner)
[2023-03-03 22:57:59,408] INFO Cleaner 0: Building offset map for __consumer_offsets-7... (kafka.log.LogCleaner)
[2023-03-03 22:57:59,438] INFO Cleaner 0: Building offset map for log __consumer_offsets-7 for 1 segments in offset range [423171511, 424550948). (kafka.log.LogCleaner)
[2023-03-03 22:58:00,112] INFO Cleaner 0: Offset map for log __consumer_offsets-7 complete. (kafka.log.LogCleaner)
[2023-03-03 22:58:00,112] INFO Cleaner 0: Cleaning log __consumer_offsets-7 (cleaning prior to Fri Mar 03 22:57:45 CST 2023, discarding tombstones prior to Thu Mar 02 03:12:35 CST 2023)... (kafka.log.LogCleaner)
[2023-03-03 22:58:00,113] INFO Cleaner 0: Cleaning LogSegment(baseOffset=0, size=5775, lastModifiedTime=1677713245727, largestRecordTimestamp=Some(1676529831943)) in log __consumer_offsets-7 into 0 with deletion horizon 1677697955381, retaining deletes. (kafka.log.LogCleaner)
[2023-03-03 22:58:00,113] INFO Cleaner 0: Cleaning LogSegment(baseOffset=421792074, size=7373, lastModifiedTime=1677784355381, largestRecordTimestamp=Some(1677784355381)) in log __consumer_offsets-7 into 0 with deletion horizon 1677697955381, retaining deletes. (kafka.log.LogCleaner)
[2023-03-03 22:58:00,114] INFO Cleaner 0: Swapping in cleaned segment LogSegment(baseOffset=0, size=5775, lastModifiedTime=1677784355381, largestRecordTimestamp=Some(1676529831943)) for segment(s) List(LogSegment(baseOffset=0, size=5775, lastModifiedTime=1677713245727, largestRecordTimestamp=Some(1676529831943)), LogSegment(baseOffset=421792074, size=7373, lastModifiedTime=1677784355381, largestRecordTimestamp=Some(1677784355381))) in log Log(dir=/home/gateway/environment/kafka_2.12-2.8.0/data/__consumer_offsets-7, topic=__consumer_offsets, partition=7, highWatermark=424551142, lastStableOffset=424551142, logStartOffset=0, logEndOffset=424551142) (kafka.log.LogCleaner)
[2023-03-03 22:58:00,115] INFO Cleaner 0: Cleaning LogSegment(baseOffset=423171511, size=104851433, lastModifiedTime=1677855465314, largestRecordTimestamp=Some(1677855465314)) in log __consumer_offsets-7 into 423171511 with deletion horizon 1677697955381, retaining deletes. (kafka.log.LogCleaner)
[2023-03-03 22:58:00,779] INFO Cleaner 0: Swapping in cleaned segment LogSegment(baseOffset=423171511, size=7373, lastModifiedTime=1677855465314, largestRecordTimestamp=Some(1677855465314)) for segment(s) List(LogSegment(baseOffset=423171511, size=104851433, lastModifiedTime=1677855465314, largestRecordTimestamp=Some(1677855465314))) in log Log(dir=/home/gateway/environment/kafka_2.12-2.8.0/data/__consumer_offsets-7, topic=__consumer_offsets, partition=7, highWatermark=424551239, lastStableOffset=424551239, logStartOffset=0, logEndOffset=424551239) (kafka.log.LogCleaner)
[2023-03-03 22:58:00,779] INFO [kafka-log-cleaner-thread-0]:
Log cleaner thread 0 cleaned log __consumer_offsets-7 (dirty section = [423171511, 424550948])
100.0 MB of log processed in 1.4 seconds (72.9 MB/sec).
Indexed 100.0 MB in 0.7 seconds (142.0 Mb/sec, 51.3% of total time)
Buffer utilization: 0.0%
Cleaned 100.0 MB in 0.7 seconds (149.9 Mb/sec, 48.7% of total time)
Start size: 100.0 MB (1,379,535 messages)
End size: 0.0 MB (98 messages)
100.0% size reduction (100.0% fewer messages)
(kafka.log.LogCleaner)
```
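This cleaner output is log compaction of the internal `__consumer_offsets` topic, not retention of gpsdata: 1,379,535 messages were reduced to 98. Compaction keeps only the latest record for each key, so repeated offset commits for the same group/topic/partition collapse to one. The following simplified Python sketch illustrates that rule. It ignores tombstones, segment boundaries, and compaction lag, and uses hypothetical string keys. It also suggests why compaction alone would not shrink gpsdata by age: a record is dropped only when a newer record with the same key exists.

```python
# Simplified model of log compaction: keep only the latest record for each key.
# Real compaction works segment by segment and also handles tombstones; not modeled here.


def compact(records: list[tuple[str, str]]) -> list[tuple[str, str]]:
    """Return the surviving (key, value) pairs, in original order."""
    last_index = {}
    for i, (key, _value) in enumerate(records):
        last_index[key] = i  # a later record for the same key supersedes earlier ones
    return [rec for i, rec in enumerate(records) if last_index[rec[0]] == i]


# Hypothetical offset commits: the same group:topic:partition key committed repeatedly.
commits = [("group-a:gpsdata:0", str(offset)) for offset in range(1000)]
commits += [("group-b:gpsdata:0", str(offset)) for offset in range(500)]
print(len(commits), "->", len(compact(commits)))  # 1500 -> 2
```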

I can't see any error logs, and I have no idea where to start.

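Production runs nonstop, 24 hours a day, always producing to the same topic, "gpsdata", and several consumer groups on the server subscribe to it and write the data into the database. Does the fact that this topic is constantly in use mean its data never gets cleaned up automatically?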

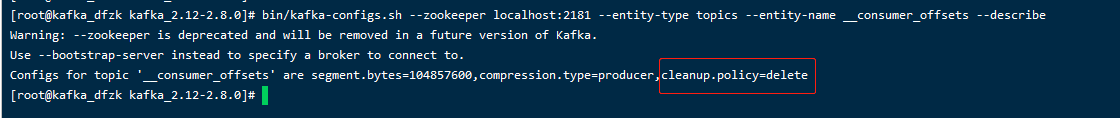

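1. Topics like `__consumer_offsets` store consumer offsets and are compacted by default; don't turn that off.
2. Having many consumers on gpsdata does not interfere with normal cleanup either.
3. The `log.retention.hours=72` policy is fine.
4. Try consuming the data from the command line, starting from the beginning, and check whether the earliest records fall within the last 3 days.
5. On Windows, deleting files usually requires administrator privileges. Are you running Kafka as administrator?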

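Kafka runs on CentOS 7. My programs are not the only subscribers to this data; colleagues subscribe too. During testing they probably used many consumer groups and may have consumed data more or less at random. Can consumers that fetch data incorrectly affect the log cleanup policy?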

Following your suggestion, I ran the command below. The data it returned is from last November, which matches the oldest data in the current folder.

```shell
./kafka-console-consumer.sh --bootstrap-server xxxx --topic gpsdata --from-beginning
```

Here is the data it returned:

```text
{"gnsscs":"0.0","jllsh":74,"ljzxsc":746487,"rhdwsfyx":2,"wgsj":1667959174000,"wxdwhb":"495.0","wxdwjd":"103.959009","wxdwwd":"30.66726","wxdwzt":1,"wxsl":30}
{"gnsscs":"4.14","jllsh":0,"ljzxsc":3478417,"rhdwsfyx":2,"wgsj":1667959173000,"wxdwhb":"518.8","wxdwjd":"104.009276","wxdwwd":"30.675806","wxdwzt":1,"wxsl":30}
```

These records contain a timestamp. I converted it, and it's from last year.
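Converting the `wgsj` value (epoch milliseconds) confirms the date. One caveat, offered as an assumption worth checking: `wgsj` is a field inside the message body, while time-based retention uses the Kafka record timestamp. The console consumer prints the record timestamp if you add `--property print.timestamp=true`. The following small Python sketch does the conversion and the 72-hour comparison, taking CST (UTC+8) as the local zone, as in the broker log.

```python
from datetime import datetime, timedelta, timezone

# "wgsj" is epoch milliseconds inside the JSON payload (not necessarily the record timestamp).
CST = timezone(timedelta(hours=8))  # China Standard Time, UTC+8


def epoch_ms_to_datetime(ms: int, tz: timezone = CST) -> datetime:
    """Convert epoch milliseconds to an aware datetime in the given zone."""
    return datetime.fromtimestamp(ms / 1000, tz=tz)


def older_than_retention(ms: int, now: datetime, retention: timedelta) -> bool:
    """True if the timestamp lies outside the retention window ending at `now`."""
    return now - epoch_ms_to_datetime(ms) > retention


ts = 1667959174000
print(epoch_ms_to_datetime(ts).isoformat())  # 2022-11-09T09:59:34+08:00
now = datetime(2023, 3, 3, 22, 58, tzinfo=CST)  # roughly when the cleaner log above was written
print(older_than_retention(ts, now, timedelta(hours=72)))  # True
```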

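Then the configuration file isn't taking effect. Only these possibilities remain:

1. The wrong configuration file was specified.
2. The Kafka node wasn't restarted after the change.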

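The config file is definitely the right one; I tried both relative and absolute paths. After the change I restarted both Kafka and ZooKeeper, and it still made no difference.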
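One thing the thread doesn't rule out (an assumption on my part): topic-level configs take precedence over the broker defaults in server.properties. If gpsdata picked up its own `cleanup.policy` or `retention.ms` while it was set to compact, editing server.properties and restarting would not change it. You can list any such overrides with `kafka-configs.sh --bootstrap-server xxxx --entity-type topics --entity-name gpsdata --describe`. The following Python sketch models only that precedence rule. The override shown is hypothetical.

```python
# Sketch of config precedence: a topic-level override beats the broker default.
# Only two keys are mapped here; the broker default log.retention.ms is the resolved value.
BROKER_TO_TOPIC_KEY = {
    "log.retention.ms": "retention.ms",
    "log.cleanup.policy": "cleanup.policy",
}


def effective_topic_config(broker_defaults: dict[str, str],
                           topic_overrides: dict[str, str]) -> dict[str, str]:
    """Start from broker defaults (renamed to topic keys), then let overrides win."""
    effective = {BROKER_TO_TOPIC_KEY[k]: v for k, v in broker_defaults.items()
                 if k in BROKER_TO_TOPIC_KEY}
    effective.update(topic_overrides)
    return effective


broker = {"log.retention.ms": "259200000", "log.cleanup.policy": "delete"}
# Hypothetical leftover override from when the topic was configured for compaction:
overrides = {"cleanup.policy": "compact"}
print(effective_topic_config(broker, overrides))
# {'retention.ms': '259200000', 'cleanup.policy': 'compact'}
```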

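Since production is producing data all the time, always to the gpsdata topic, could the constant production be what keeps Kafka from ever deciding to delete anything? Does it use the .timeindex files to find expired data to delete?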
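On the .timeindex question: as far as I understand Kafka 2.x, time-based retention compares each segment's largest record timestamp against the retention limit. That value is also what the last entry of the segment's .timeindex holds. The bundled `kafka-dump-log.sh --files <segment>.timeindex` decodes these files. As a rough cross-check, here is a minimal Python sketch that reads the largest timestamp directly. It assumes the 12-byte entry layout described in the comments, which is an assumption about the on-disk format and not something confirmed in this thread.

```python
import struct
import tempfile
from pathlib import Path
from typing import Optional

# Assumed .timeindex layout: fixed 12-byte entries, each an 8-byte big-endian
# timestamp (ms) followed by a 4-byte big-endian offset relative to the segment base.
ENTRY = struct.Struct(">qi")


def max_timestamp(timeindex_path: Path) -> Optional[int]:
    """Largest timestamp recorded in a .timeindex file, or None if it has no entries."""
    data = timeindex_path.read_bytes()
    usable = len(data) - len(data) % ENTRY.size  # ignore a trailing partial entry
    # The active segment's index is preallocated with zeros; skip that padding.
    timestamps = [ts for ts, _rel_offset in ENTRY.iter_unpack(data[:usable]) if ts > 0]
    return max(timestamps) if timestamps else None


# Demo on a synthetic file: two entries followed by two zeroed padding entries.
with tempfile.TemporaryDirectory() as tmp:
    demo = Path(tmp) / "00000000000000000000.timeindex"
    demo.write_bytes(ENTRY.pack(1667959173000, 0) + ENTRY.pack(1667959174000, 42) + bytes(24))
    print(max_timestamp(demo))  # 1667959174000
```

Run it over every .timeindex in a partition directory, for example one of the `gpsdata-N` folders under `/home/gateway/environment/kafka_2.12-2.8.0/data`. A value lying in the future would mean that segment never looks old enough to expire.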

I have never seen any data get marked as deleted.

No. Kafka deletes on a rolling basis, based on the message timestamps.

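If you can really confirm all of that, then in all my years of using Kafka this is the first time I've come across a case like yours.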
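Here is a more concrete look at "rolling deletion based on message timestamps". As far as I understand Kafka 2.x (`Log.deletableSegments`), the broker walks segments from oldest to newest and deletes each one whose largest record timestamp is older than the retention limit. It stops at the first segment that has not yet expired. Consumers and committed offsets play no part in this check. The simplified Python model below ignores the high-watermark and active-segment rules. It also illustrates one hypothesis for this thread: a single record with a far-future timestamp in the oldest segment would block deletion of everything behind it.

```python
from dataclasses import dataclass

# Simplified time-based retention: oldest segment first, stop at the first non-expired one.
# High watermark and active-segment handling are deliberately left out.


@dataclass
class Segment:
    base_offset: int
    largest_timestamp_ms: int  # largest record timestamp in the segment


def deletable_segments(segments: list[Segment], now_ms: int, retention_ms: int) -> list[Segment]:
    deletable = []
    for seg in sorted(segments, key=lambda s: s.base_offset):
        if now_ms - seg.largest_timestamp_ms > retention_ms:
            deletable.append(seg)
        else:
            break  # later segments are not examined once one is still within retention
    return deletable


HOUR = 3_600_000
now = 1677855480000  # hypothetical "now" (epoch ms)
retention = 72 * HOUR
normal = [Segment(0, now - 200 * HOUR), Segment(100, now - 100 * HOUR), Segment(200, now - HOUR)]
print([s.base_offset for s in deletable_segments(normal, now, retention)])  # [0, 100]

# Hypothetical: the oldest segment holds one record stamped a year in the future.
blocked = [Segment(0, now + 365 * 24 * HOUR)] + normal[1:]
print([s.base_offset for s in deletable_segments(blocked, now, retention)])  # []
```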

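Thanks a lot, I'll keep digging into it. For now I'm just marking the topic for deletion with a command. When I tried it, everything was removed automatically about a minute later. The downside is that I have to make sure the consumers have pulled all the produced data first, because everything is deleted at once.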

```shell
./kafka-topics.sh --delete --zookeeper 127.0.0.1:2181 --topic gpsdata
```

That's pretty drastic. Looking forward to hearing what you find.
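A less drastic option than deleting the whole topic is `kafka-delete-records.sh`, which ships with the 2.x tools as far as I know. It removes the records below a given offset in each partition and leaves the topic in place. The tool reads its targets from a JSON file passed with `--offset-json-file`. Here is a minimal Python sketch that builds that file. The partition numbers and offsets are hypothetical placeholders.

```python
import json

# Build the offsets file for kafka-delete-records.sh: records before each given offset
# are removed from that partition. Partitions and offsets here are placeholders.


def delete_records_json(topic: str, offsets: dict[int, int]) -> str:
    payload = {
        "partitions": [
            {"topic": topic, "partition": partition, "offset": offset}
            for partition, offset in sorted(offsets.items())
        ],
        "version": 1,
    }
    return json.dumps(payload, indent=2)


print(delete_records_json("gpsdata", {0: 424000000, 1: 398000000}))
```

Save the output as, for example, `offsets.json`, then run `./kafka-delete-records.sh --bootstrap-server xxxx --offset-json-file offsets.json`. To avoid deleting unread data, choose each offset at or below the smallest committed offset among the consumer groups that still need that partition.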
